A Look at the Causes of Nginx 502 and 504 Errors

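502 and 504 are two status codes Nginx commonly returns, but you may not know exactly what each one means. This article analyzes the source code and packet captures to examine both in detail.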

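502 and 504 usually occur when Nginx, acting as a proxy server, fails in its request to an upstream server. To make the analysis below easier to follow, it is worth first reviewing the flow of Nginx's upstream module before we start. For details on this part, see the article Nginx Upstream Flow Analysis (Nginx Upstream流程分析).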

With this framework in place, let's get into the main topic.

Source code analysis

First, let's look at ngx_http_upstream_connect, the function responsible for connecting to the upstream server.

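The source shows that when the socket connection to the upstream server cannot be created, Nginx retries by connecting to the next upstream server, passing the error type NGX_HTTP_UPSTREAM_FT_ERROR.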

Next, let's look at ngx_http_upstream_next to analyze the retry logic.

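From this we can already draw clear conclusions:

When an error forces the request to be terminated, Nginx returns 502 by default. The exception is a few explicitly handled error types.

The NGX_HTTP_UPSTREAM_FT_ERROR passed in the previous step, when the socket connection failed, is not one of those explicit types. It therefore results in 502.

These few lines also show that 504 is one of the explicitly handled error types.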
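
The status selection described above fits in a few lines. Below is a minimal Python sketch, not nginx code. The real logic is a C switch on ft_type inside ngx_http_upstream_next. The short type names and the function upstream_next_status are illustrative. The explicit cases listed follow recent nginx sources as far as we know, so check them against your version.

```python
# Sketch of the status chosen in ngx_http_upstream_next (simplified).
# Keys abbreviate nginx's NGX_HTTP_UPSTREAM_FT_* failure types.
EXPLICIT_STATUS = {
    "FT_TIMEOUT": 504,   # a connect/send/read timer expired
    "FT_HTTP_504": 504,  # the upstream itself answered 504
    "FT_HTTP_500": 500,
    "FT_HTTP_503": 503,
    "FT_HTTP_403": 403,
    "FT_HTTP_404": 404,
    "FT_HTTP_429": 429,
}

BAD_GATEWAY = 502


def upstream_next_status(ft_type: str) -> int:
    """Return the status a failed request would be finalized with.

    Types without an explicit case (FT_ERROR, FT_INVALID_HEADER,
    FT_HTTP_502, ...) fall through to the default branch: 502.
    """
    return EXPLICIT_STATUS.get(ft_type, BAD_GATEWAY)
```

For example, upstream_next_status("FT_ERROR") returns 502. That is why the failed socket connection above ends in a 502.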

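To find out when 504 is returned, we need to find out when NGX_HTTP_UPSTREAM_FT_TIMEOUT and NGX_HTTP_UPSTREAM_FT_HTTP_504 are produced. Searching the code turns up two main places:

- ngx_http_upstream_send_request_handler, which sends the request

- ngx_http_upstream_process_header, which processes the response header

These are exactly the steps between peer.get and ngx_http_upstream_next in the diagram above. Even without searching backwards from the code, a forward analysis of the flow would lead us to these same steps.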

ngx_http_upstream_send_request_handler

ngx_http_upstream_process_header

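To sum up so far:

- Nginx returns 504 when sending the request to the upstream, or reading the response header, times out;

- Nginx returns 502 when it fails to establish a connection with the upstream.

Note that this conclusion covers only the logic analyzed above and is clearly not the whole picture, since space does not allow listing all the code. In practice, an error can also occur while sending the request or reading the header, for example when the upstream sends an RST. Such an error ends the request with a 502. The point of the analysis above is to help us quickly grasp the overall framework.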
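
The read-phase outcomes in this summary can be reproduced with plain sockets. Below is a minimal Python sketch, not nginx code. read_header_outcome is a hypothetical helper: it connects, sends a request and waits for the first response byte, then labels the result the way the analysis above does. Silence until the timeout becomes a timeout (504 in nginx). A reset or a premature close becomes an error (502 in nginx). Waiting for a single byte is only a rough stand-in for reading the whole header.

```python
import socket

# nginx-style labels and the status ngx_http_upstream_next maps them to.
STATUS = {"FT_TIMEOUT": 504, "FT_ERROR": 502}


def read_header_outcome(host: str, port: int, timeout: float) -> str:
    """Send a request and wait for the first response byte.

    Returns "OK", "FT_TIMEOUT" (connect or first byte did not arrive in
    time) or "FT_ERROR" (refused, reset, or closed before any data).
    """
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            sock.sendall(b"GET / HTTP/1.0\r\nHost: upstream\r\n\r\n")
            first = sock.recv(1)  # blocks for at most `timeout` seconds
    except socket.timeout:
        return "FT_TIMEOUT"
    except OSError:
        # ECONNREFUSED, ECONNRESET (the upstream sent RST), EPIPE, ...
        return "FT_ERROR"
    # An empty read means the upstream closed the connection prematurely.
    return "OK" if first else "FT_ERROR"
```

A listener that completes the handshake but never replies yields "FT_TIMEOUT". One that accepts and then aborts the connection yields "FT_ERROR".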

Next, let's look at Nginx's timeout settings for reverse proxying.

Reverse proxy timeout parameters

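As shown, proxy_read_timeout and proxy_send_timeout correspond to the two timeout cases analyzed above.

proxy_next_upstream_timeout limits how long ngx_http_upstream_next keeps retrying.

proxy_connect_timeout is the timeout for connecting to the upstream. It is implemented by adding a timer with ngx_add_timer. We won't analyze it here, but we will test this case later.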
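
These settings decide whether ngx_http_upstream_next tries another peer, and that decision can be sketched as a single predicate. This is a simplified Python model, assuming the condition found in recent nginx sources. Nginx gives up when:

- no tries are left;
- the failure type is not listed in proxy_next_upstream; or
- proxy_next_upstream_timeout has elapsed, measured from the first attempt.

The real check also considers non-idempotent requests and unbuffered request bodies, which are omitted here. The function and parameter names are hypothetical.

```python
def should_give_up(tries_left: int,
                   next_upstream: set,
                   failure: str,
                   elapsed_ms: int,
                   next_upstream_timeout_ms: int) -> bool:
    """Return True if nginx would stop retrying and answer the client.

    tries_left: peers still allowed; proxy_next_upstream_tries caps it,
        and 0 in that directive means "no cap" (all peers may be tried).
    next_upstream: failure kinds listed in proxy_next_upstream,
        e.g. {"error", "timeout"}.
    failure: kind of the failure that just happened.
    next_upstream_timeout_ms: proxy_next_upstream_timeout; 0 disables it.
    """
    if tries_left == 0:
        return True  # every allowed peer has been tried
    if failure not in next_upstream:
        return True  # this kind of failure is not configured for retry
    if next_upstream_timeout_ms and elapsed_ms >= next_upstream_timeout_ms:
        return True  # the retry window is used up
    return False
```

The experiment uses proxy_next_upstream error timeout, with both limits at 0. Under those settings, should_give_up(3, {"error", "timeout"}, "error", 5, 0) is False, so Nginx keeps going until no tries are left. Giving up does not change the status: the client still gets the status derived from the last failure type.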

Experimental verification

Simulating a slow upstream server
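
Below is a minimal stand-in for such a server, sketched in Python with the standard library's http.server. Several details are assumptions:

- the helper name make_slow_server;
- the 127.0.0.1 bind address;
- the 10-second default delay.

The delay was chosen only so that it exceeds the 3-second proxy_read_timeout used in this test.

```python
import time
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def make_slow_server(port: int = 3000, delay_s: float = 10.0) -> ThreadingHTTPServer:
    """Build (but do not start) an HTTP server that waits delay_s before replying."""

    class SlowHandler(BaseHTTPRequestHandler):
        def do_GET(self):
            time.sleep(delay_s)  # hold the request to simulate a slow upstream
            body = b"slow response\n"
            self.send_response(200)
            self.send_header("Content-Type", "text/plain")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, *args):
            pass  # silence per-request logging

    return ThreadingHTTPServer(("127.0.0.1", port), SlowHandler)
```

Start it with make_slow_server(3000).serve_forever(). Port 3000 is the slow node in this experiment. A threading server is used so that a retry or a second client is not blocked behind a sleeping request.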

Nginx configuration

Simulating the client with curl
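
Below is a minimal Python stand-in for the curl client. What matters in this experiment is the status code and the elapsed time: an immediate 502 and a 504 after about 3 s point to different causes. The function name probe is hypothetical. The URL you pass depends on where your Nginx listens, which is not shown here.

```python
import time
import urllib.error
import urllib.request


def probe(url: str, timeout: float = 60.0):
    """Send one GET and return (status_code, elapsed_seconds)."""
    start = time.monotonic()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            status = resp.status
    except urllib.error.HTTPError as err:
        status = err.code  # urllib raises for 4xx/5xx, e.g. 502 or 504
    return status, time.monotonic() - start
```

Call it with the Nginx URL, for instance probe("http://127.0.0.1:8080/") if Nginx happens to listen on port 8080.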

Let's look at the Nginx error log

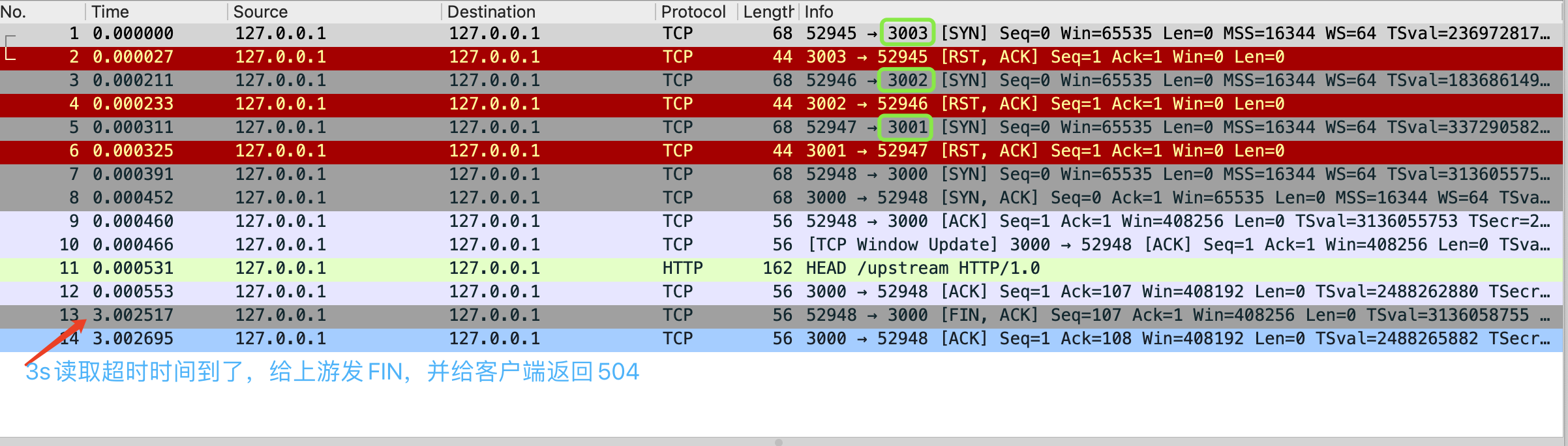

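Let's look at the packet capture.

From the figure we can glean a few points:

- By default Nginx selects upstream nodes with the weighted round-robin (WRR) load-balancing algorithm, rather than simply in the order they are listed;

- proxy_next_upstream_timeout and proxy_next_upstream_tries are both set to 0, which removes any limit on retry time and count. As a result, Nginx tries every upstream node;

- Walking through the process in the figure:
  - Nothing is listening on ports 3001-3003. During the handshake each SYN is answered immediately with an RST, so the connection fails.
  - proxy_next_upstream error timeout; enables retries on errors and timeouts, so Nginx moves on to the next node. It continues until it reaches port 3000, whose service responds slowly.
  - With proxy_read_timeout 3; the read times out after 3 seconds. No nodes are left to retry, so Nginx must respond immediately and the client receives a 504.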

The order in which upstream nodes are picked is not fixed. As a result, the test above does not always return 504; it may also return 502.

So when does it return 502?

When the last retry lands on one of ports 3001-3003, the result is 502.
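
Every node is tried, and each failure only hands over to the next one. The client therefore sees the status of whichever failure comes last. A small Python replay makes this explicit. The OUTCOME table encodes the setup described above: 3000 is slow, and 3001-3003 refuse connections. The function name replay is hypothetical.

```python
# Outcome of one attempt against each port in the experiment:
# 3000 accepts but replies after proxy_read_timeout; 3001-3003 refuse.
OUTCOME = {3000: "timeout", 3001: "error", 3002: "error", 3003: "error"}

# Status ngx_http_upstream_next derives from each failure kind.
STATUS = {"timeout": 504, "error": 502}


def replay(order):
    """Try ports in the balancer's order; return (trace, final status).

    With proxy_next_upstream error timeout and no retry limits, every
    attempt fails over to the next port, so the last failure decides.
    """
    trace = [f"{port}: {OUTCOME[port]}" for port in order]
    return trace, STATUS[OUTCOME[order[-1]]]
```

The next capture shows the order 3003, 3000, 3001, 3002, which ends on a refused port and gives 502. Any order ending on 3000, such as 3001, 3002, 3003, 3000, gives 504.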

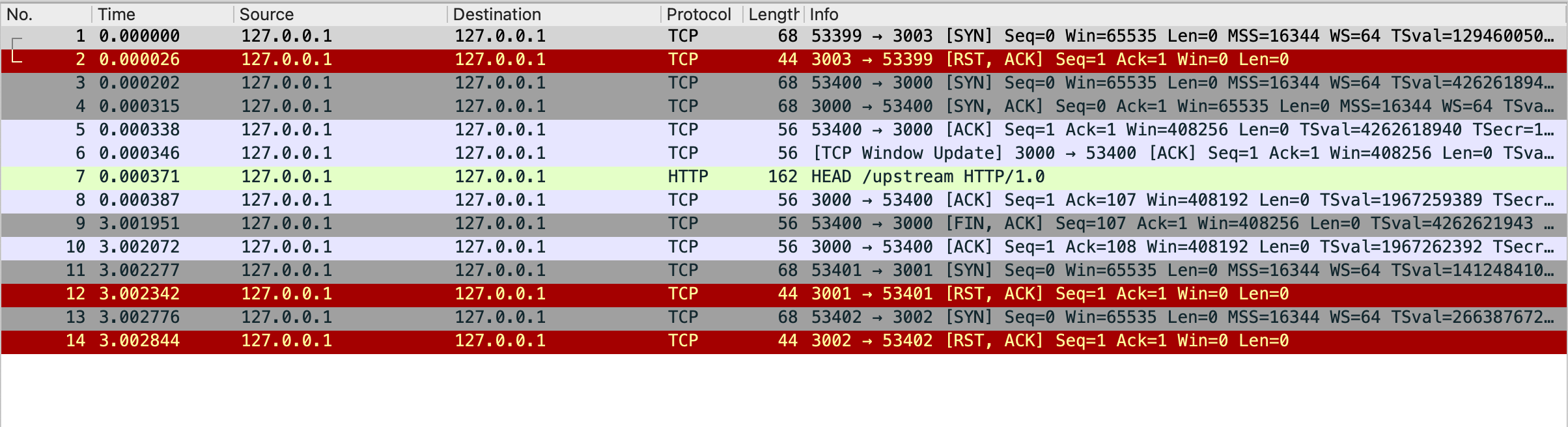

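Capture packets again to verify:

Walking through it, Nginx makes four attempts before giving up:

- port 3003: the connection fails;
- port 3000: the connection succeeds, but the read times out after 3 s;
- port 3001: the connection fails;
- port 3002: the connection fails.

With no nodes left to try, it returns 502.

Nginx error log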

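The retry process is visible here as well.

Connection timeout

When introducing the timeout parameters, we mentioned proxy_connect_timeout, which sets the timeout for the TCP handshake. Again, a small test makes this case easier to understand.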
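
As noted earlier, proxy_connect_timeout is enforced with a timer added by ngx_add_timer while nginx waits for its connect to complete. The Python sketch below imitates that pattern on a POSIX system with a non-blocking connect_ex plus select. It illustrates the idea under that assumption and is not nginx code; nonblocking_connect is a hypothetical name. If the timer fires first, the result is a timeout (504 in nginx). If the kernel reports a failure first, it is an error (502).

```python
import errno
import select
import socket


def nonblocking_connect(host: str, port: int, connect_timeout: float) -> str:
    """Return "OK", "FT_ERROR" (connect failed) or "FT_TIMEOUT" (timer fired)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.setblocking(False)
    try:
        rc = sock.connect_ex((host, port))
        if rc not in (0, errno.EINPROGRESS):
            return "FT_ERROR"  # failed immediately, e.g. ECONNREFUSED
        if rc == errno.EINPROGRESS:
            # Wait for the handshake, but no longer than the "timer".
            _, writable, _ = select.select([], [sock], [], connect_timeout)
            if not writable:
                return "FT_TIMEOUT"  # connect timer expired first
        # Ask the kernel how the connect ended (0 means success).
        err = sock.getsockopt(socket.SOL_SOCKET, socket.SO_ERROR)
        return "OK" if err == 0 else "FT_ERROR"
    finally:
        sock.close()
```

Against a port with a listener this returns "OK". Against a closed local port it returns "FT_ERROR". Reproducing "FT_TIMEOUT" needs a destination whose SYNs are silently dropped, as with the firewall in this test.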

Nginx configuration

Simulating a client request with curl

Log

The log spells out the cause of the error: the connection to the upstream timed out.

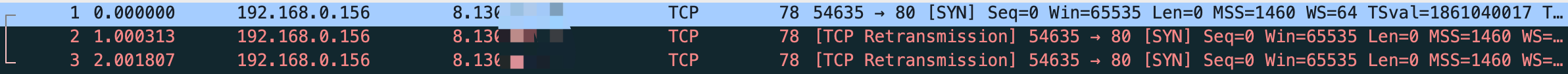

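Packet capture

Because the firewall blocks the connection, no SYN-ACK ever comes back. After 3 s the timeout is reached and the client receives a 504.

What happens if proxy_connect_timeout is set large enough? Will it still return 504?

Modify the Nginx configuration: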

Check again with curl:

This time it returns 502, not 504.

Error log

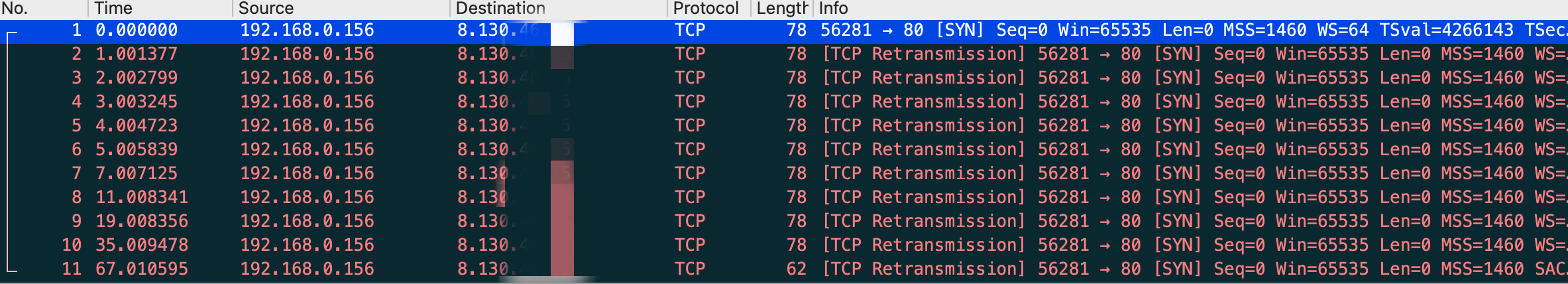

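Packet capture

Analyzing the cause:

Because no SYN-ACK arrives, the operating system keeps retransmitting the SYN until it reaches its maximum retry count. It then gives up and reports a connection timeout on the socket. proxy_connect_timeout is large enough that Nginx's own timer has not fired yet. Nginx therefore sees a failed connect(), which is an error (NGX_HTTP_UPSTREAM_FT_ERROR) rather than a timeout, and returns 502 instead of 504.

PS: The number of SYN retries and the intervals between them differ across platforms. The total retry time can therefore also exceed proxy_connect_timeout. In that case Nginx's timer fires first and it returns 504 rather than 502.

PS: The test results above were obtained on macOS.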
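
Whether the failure surfaces as 504 or 502 depends on which deadline is reached first: Nginx's proxy_connect_timeout timer or the kernel's SYN retransmission limit. The Python sketch below illustrates this with the Linux schedule. It assumes the default net.ipv4.tcp_syn_retries = 6 and an initial retransmission timeout of 1 s that doubles each time, which the tcp(7) man page describes as roughly 127 s in total. macOS, where the tests above ran, uses a different schedule, so its numbers differ. The function names are hypothetical.

```python
def syn_give_up_seconds(syn_retries: int = 6, initial_rto: float = 1.0) -> float:
    """Approximate time until connect() fails with ETIMEDOUT (Linux-style).

    The SYN is retransmitted syn_retries times with the timeout doubling
    each time (1 s, 2 s, 4 s, ...); the kernel gives up after one more
    interval, so the total is initial_rto * (2**(syn_retries + 1) - 1).
    """
    return initial_rto * (2 ** (syn_retries + 1) - 1)


def connect_failure_status(proxy_connect_timeout: float, os_give_up: float) -> int:
    """Status for an upstream that never answers the SYN."""
    if proxy_connect_timeout < os_give_up:
        return 504  # Nginx's connect timer fires first: a timeout
    return 502      # the kernel reports ETIMEDOUT first: a connect() error
```

With the Linux defaults, syn_give_up_seconds() is 127.0. A 3 s proxy_connect_timeout therefore yields 504, while one of several minutes yields 502, mirroring the two experiments above.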

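Summary

- When proxy_connect_timeout, proxy_send_timeout or proxy_read_timeout expires, Nginx returns 504. proxy_next_upstream_timeout, by contrast, only stops further retries. The response then carries the status of the last failure: 504 after a timeout, 502 after a connection error.

- 502 is best seen as the superset of failed upstream requests. 504 is a subset of it, covering specifically the failures caused by one of Nginx's own timeouts (connect, send or read) expiring.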

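The analysis above is only meant as a starting point and is far from exhaustive; it does not cover every situation that can cause a 502 or 504. When you run into one, checking the error log can help pinpoint the problem.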
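
As a starting point for that, here is a minimal Python sketch that scans error-log lines for a few messages nginx writes on upstream failures. It maps each message to the status the analysis above predicts. The message list is not exhaustive and the mapping is a heuristic; classify and PATTERNS are hypothetical names. A "connect() failed ... timed out" line means the kernel gave up, and it maps to 502. An "upstream timed out" line means Nginx's own timer fired, and it maps to 504.

```python
import re

# Substrings nginx writes to the error log on common upstream failures,
# paired with the status the analysis above predicts. Order matters:
# the first match wins. Not an exhaustive list.
PATTERNS = [
    ("upstream timed out", 504),                      # nginx's own timer fired
    ("connect() failed", 502),                        # refused, or kernel gave up
    ("Connection reset by peer", 502),                # upstream sent RST
    ("upstream prematurely closed connection", 502),
    ("no live upstreams", 502),
]

# nginx appends the peer it was talking to as: upstream: "http://host:port/..."
UPSTREAM_RE = re.compile(r'upstream: "([^"]+)"')


def classify(line: str):
    """Return (upstream or None, likely status or None) for one error-log line."""
    match = UPSTREAM_RE.search(line)
    upstream = match.group(1) if match else None
    for needle, status in PATTERNS:
        if needle in line:
            return upstream, status
    return upstream, None
```

Running each error-log line for a request through classify lists the peers in the order they were tried, which is how the retry sequence can be read off the logs.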

Author: XniLe

Last updated: 2024-01-11